How to use PySpark in AWS Glue

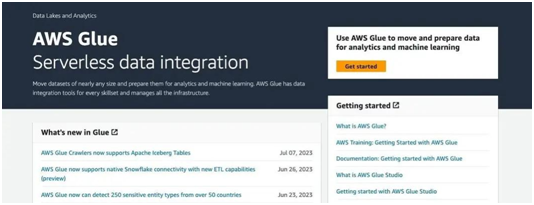

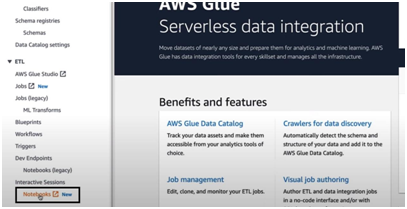

AWS Glue:

- AWS Glue is a serverless data integration service that makes it easier to discover, prepare and combine data for analytics and machine learning.

- AWS Glue provides both visual and code-based interfaces to make data integration easier.

- Users can more easily find and access data using the AWS Glue Data Catalog.

- With AWS Glue Studio, data engineers and ETL (extract, transform, and load) developers can easily construct, execute and monitor the workflows visually.

- Data analysts and data scientists can use AWS Glue DataBrew to visually enrich, clean, and normalize data without writing code.

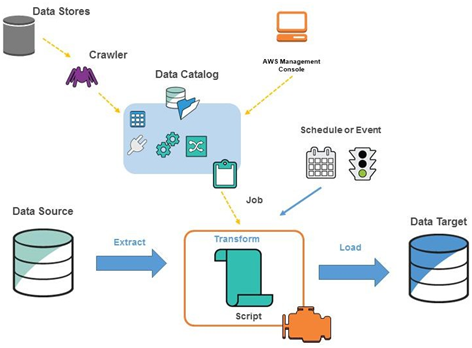

Main components of AWS Glue:

- Data Catalog: a central metadata repository. AWS Glue uses the Data Catalog to store metadata about data sources, transforms, and targets.

- Crawler: a program that connects to a data store, progresses through a prioritized list of classifiers to determine the schema for your data, and then creates metadata tables in the AWS Glue Data Catalog.

- DataBrew for cleaning and normalizing data with a visual interface.

- A flexible scheduler that handles dependency resolution, job monitoring, and retries.
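
As a rough illustration of how a crawler feeds the Data Catalog, the sketch below uses boto3's Glue client to register a crawler over an S3 path and then list the tables in a catalog database. The crawler name, IAM role ARN, database name, and S3 path are placeholders rather than values from this walkthrough, and the `glue` argument is assumed to be a client created with `boto3.client("glue")`.

```python
def crawler_definition(name: str, role_arn: str, database: str, s3_path: str) -> dict:
    """Build the keyword arguments for the Glue create_crawler call."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,  # Data Catalog database that receives the tables
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }


def create_and_start_crawler(glue, definition: dict) -> None:
    """Register the crawler, then kick off one run (the run is asynchronous)."""
    glue.create_crawler(**definition)
    glue.start_crawler(Name=definition["Name"])


def list_table_names(glue, database: str) -> list:
    """Return the names of all tables in a Data Catalog database."""
    names, token = [], None
    while True:
        kwargs = {"DatabaseName": database}
        if token:
            kwargs["NextToken"] = token
        page = glue.get_tables(**kwargs)
        names.extend(table["Name"] for table in page["TableList"])
        token = page.get("NextToken")
        if not token:
            return names
```

Because a crawler run is asynchronous, `list_table_names` only shows the new tables once the crawler has finished.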

AWS Glue architecture:

PySpark in AWS Glue

Pre-requisites:

- An AWS account: Sign up for an AWS account at https://aws.amazon.com/

- AWS Glue permissions: Ensure that your AWS account has the necessary permissions to create and run AWS Glue jobs.

Step 1:

- To store your sample data and Glue job artifacts, create an S3 bucket. Open the AWS Management Console, navigate to the S3 service, and choose “Create bucket”.

- Provide a unique name and select your desired Region.

- Based on your requirements, you can create additional subfolders and then upload the data files to the S3 bucket.

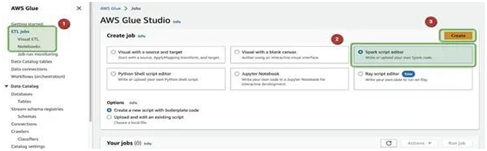
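
The same step can also be scripted. The following is a minimal sketch, assuming a boto3 S3 client (`boto3.client("s3")`) is passed in as `s3`; the bucket name, Region, file paths, and the `input/` prefix are placeholders. In S3 a "subfolder" is just a key prefix, so uploading under `input/` creates it implicitly.

```python
import os


def create_bucket_kwargs(bucket: str, region: str) -> dict:
    """Build the arguments for the S3 create_bucket call."""
    kwargs = {"Bucket": bucket}
    # us-east-1 is the default location and must not be sent as a LocationConstraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def upload_data_files(s3, bucket: str, files: list, prefix: str = "input/") -> list:
    """Upload local files under a key prefix and return the object keys."""
    keys = []
    for path in files:
        key = prefix + os.path.basename(path)
        s3.upload_file(path, bucket, key)  # upload_file(Filename, Bucket, Key)
        keys.append(key)
    return keys


def create_bucket_and_upload(s3, bucket: str, region: str, files: list) -> list:
    """Create the bucket, then upload the sample data files into it."""
    s3.create_bucket(**create_bucket_kwargs(bucket, region))
    return upload_data_files(s3, bucket, files)
```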

Step 2:

Access the AWS Glue service by logging in to the AWS Management Console.

- Select “ETL Jobs” in the left-hand menu, then select “Spark script editor” and click “Create”.

- To work in a notebook instead, select “interactive sessions and Notebooks”.

- After creating the job, you can give it a name at the top of the page.

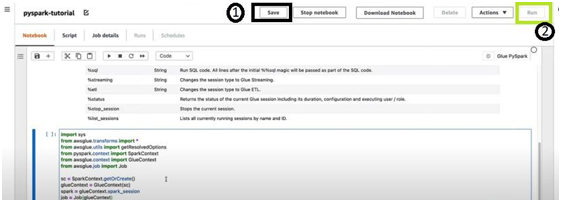
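
For readers who prefer to create the job programmatically rather than in the console, here is a hedged sketch using the boto3 Glue client. The job name, role ARN, and script location are placeholders, and the Glue version, worker type, and worker count are illustrative assumptions, not recommendations from this walkthrough.

```python
def job_definition(name: str, role_arn: str, script_location: str, workers: int = 2) -> dict:
    """Build the keyword arguments for the Glue create_job call."""
    return {
        "Name": name,
        "Role": role_arn,
        "Command": {
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_location,  # S3 path of the PySpark script
            "PythonVersion": "3",
        },
        "GlueVersion": "4.0",  # assumed version; pick the one you need
        "WorkerType": "G.1X",
        "NumberOfWorkers": workers,
    }


def create_spark_job(glue, definition: dict) -> str:
    """Create the job and return the name Glue reports back."""
    response = glue.create_job(**definition)
    return response["Name"]
```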

Step 3:

- Now you can write the Spark code for your data processing.

- Save the Spark code and click “Run”.

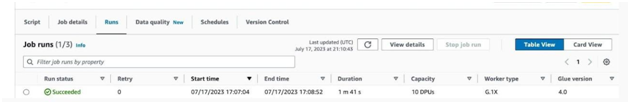
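
The Spark code for a job of this kind might look like the following minimal sketch, written for the Spark script editor. It reads CSV files from S3, normalizes the column names, drops fully empty rows, and writes Parquet back to S3. The `source_path` and `target_path` job parameters and the `clean_column_name` helper are hypothetical names chosen for illustration. The `awsglue` imports are only available inside the Glue runtime, so they sit inside `main()`, and the job body runs only when `--JOB_NAME` is present on the command line.

```python
import re
import sys


def clean_column_name(name: str) -> str:
    """Normalize a header such as ' Order ID ' to 'order_id'."""
    return re.sub(r"[^0-9a-zA-Z]+", "_", name.strip()).strip("_").lower()


def main() -> None:
    # Glue-only imports live here so the helper above can be tested on a
    # machine that does not have the awsglue library installed.
    from awsglue.context import GlueContext
    from awsglue.job import Job
    from awsglue.utils import getResolvedOptions
    from pyspark.context import SparkContext

    # source_path and target_path are hypothetical job parameters that you
    # would pass to the job as --source_path and --target_path.
    args = getResolvedOptions(sys.argv, ["JOB_NAME", "source_path", "target_path"])

    glue_context = GlueContext(SparkContext.getOrCreate())
    job = Job(glue_context)
    job.init(args["JOB_NAME"], args)

    # Read CSV files from S3 into a DynamicFrame, then switch to a Spark DataFrame.
    source = glue_context.create_dynamic_frame.from_options(
        connection_type="s3",
        connection_options={"paths": [args["source_path"]]},
        format="csv",
        format_options={"withHeader": True},
    )
    df = source.toDF()

    # Example transformation: tidy the column names and drop fully empty rows.
    df = df.toDF(*[clean_column_name(c) for c in df.columns]).dropna(how="all")

    # Write the result back to S3 as Parquet.
    df.write.mode("overwrite").parquet(args["target_path"])

    job.commit()


# Assumption: the script is launched by Glue with --JOB_NAME among its arguments.
if "--JOB_NAME" in sys.argv:
    main()
```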

- Monitor the job run in the console. You can check the job logs and progress to confirm whether the data processing succeeded.
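
Monitoring can also be done from code. The sketch below starts a run and polls its state until it reaches a terminal value; it assumes `glue` is a boto3 Glue client, and the job name, arguments, and polling interval are placeholders.

```python
import time

# Job run states after which the run will not change any further.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def run_and_wait(glue, job_name: str, arguments: dict = None, poll_seconds: float = 30):
    """Start a job run and block until it finishes.

    `arguments` uses Glue's convention of keys prefixed with '--',
    for example {"--source_path": "s3://my-bucket/input/"}.
    Returns a (state, error_message) tuple; error_message is None on success.
    """
    run_id = glue.start_job_run(JobName=job_name, Arguments=arguments or {})["JobRunId"]
    while True:
        run = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        state = run["JobRunState"]
        if state in TERMINAL_STATES:
            return state, run.get("ErrorMessage")
        time.sleep(poll_seconds)
```

A call such as `run_and_wait(boto3.client("glue"), "my-job")` would return `("SUCCEEDED", None)` for a successful run, or a failure state together with the error message reported by Glue.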

Benefits of using Glue for data integration:

- AWS Glue natively supports data stored in Amazon Aurora and all other Amazon Relational Database Service (RDS) engines, Amazon Redshift, and Amazon S3, as well as common database engines and databases.

- AWS Glue is serverless. Because there is no infrastructure to provision or manage, total cost of ownership is lower.

- You pay only for the resources used while your jobs are running.

- AWS Glue jobs can be invoked on a schedule, on demand, or based on an event.

- You can start multiple jobs in parallel or specify dependencies across jobs to build complex ETL pipelines.
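
Scheduling and job dependencies are typically expressed with Glue triggers. The sketch below builds two trigger definitions that could be passed to the boto3 Glue client's `create_trigger` call: one that runs a job on a cron schedule, and one that starts a downstream job after an upstream job succeeds. All trigger names, job names, and the cron expression are hypothetical.

```python
def scheduled_trigger(name: str, job_name: str, cron: str) -> dict:
    """Trigger that starts a job on a schedule, e.g. 'cron(0 2 * * ? *)'."""
    return {
        "Name": name,
        "Type": "SCHEDULED",
        "Schedule": cron,
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def dependency_trigger(name: str, upstream_job: str, downstream_job: str) -> dict:
    """Trigger that starts downstream_job once upstream_job has succeeded."""
    return {
        "Name": name,
        "Type": "CONDITIONAL",
        "Predicate": {
            "Conditions": [
                {
                    "LogicalOperator": "EQUALS",
                    "JobName": upstream_job,
                    "State": "SUCCEEDED",
                }
            ]
        },
        "Actions": [{"JobName": downstream_job}],
        "StartOnCreation": True,
    }


def register_triggers(glue, definitions: list) -> list:
    """Create each trigger and return the names Glue reports back."""
    return [glue.create_trigger(**d)["Name"] for d in definitions]
```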

Limitations:

- Grouping small files is not supported.

- It offers less customization and control over your ETL processes than some other cloud-based ETL tools.

Author Name : Thulasirajan G

Position : Data engineer